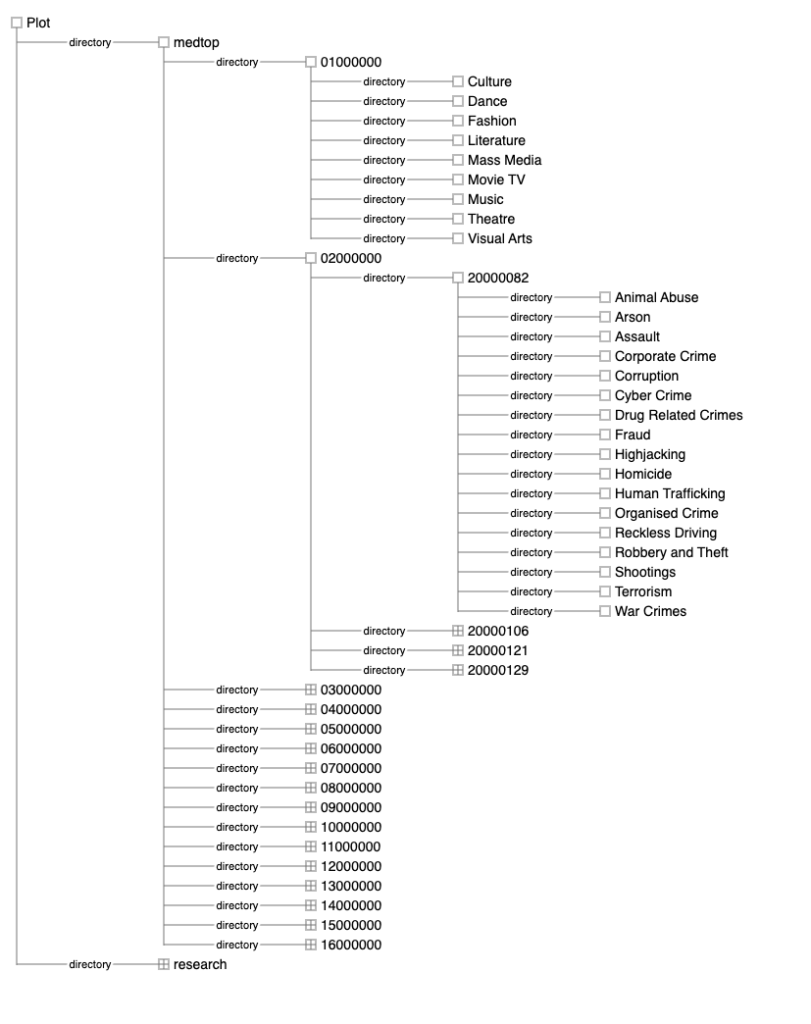

1. IPTC Media Topic Codes – classification codes used to label each news article with the category it falls under.

- The IPTC categories were studied first so that a query could be written for each one.

2. Develop Queries

- Study the IPTC categories

- Develop and test queries against Solr

- Place each query into a tree structure with its name, category, description, etc.
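The query tree can be pictured with a minimal sketch like the one below. It is not the project's actual code: the `QueryNode` class, its field names, the placeholder category codes, and the `title:` Solr field are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Iterator, List


@dataclass
class QueryNode:
    """One node of the query tree (class and field names are hypothetical)."""
    name: str
    category: str          # IPTC Media Topic code this query labels
    description: str
    query: str = ""        # Solr query string; empty for grouping nodes
    children: List["QueryNode"] = field(default_factory=list)

    def walk(self) -> Iterator["QueryNode"]:
        """Yield this node, then every descendant, depth first."""
        yield self
        for child in self.children:
            yield from child.walk()


# Placeholder codes and queries, not real IPTC values or project queries.
root = QueryNode("sport", "CODE-A", "All sport coverage", children=[
    QueryNode("soccer", "CODE-A1", "Association football",
              query="title:(soccer OR football)"),
    QueryNode("tennis", "CODE-A2", "Tennis", query="title:tennis"),
])

# Only nodes that carry a query string would be run against Solr.
runnable = [node.name for node in root.walk() if node.query]
print(runnable)  # ['soccer', 'tennis']
```

Keeping the name, category, and description on the same node as the query means a single traversal yields everything needed to run a query and label its results.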

3. Word Embeddings – real-valued vectors that encode the meaning of words, so that words that are close together in the vector space are expected to be similar in meaning.

a. The extracted words are stemmed and sorted into a list that represents the article title and summary.

Shell script – prepares the data for training ($ ./load_datasets.sh -cbrlvs)

load_database.sh

    # Parse the command-line flags. Each action flag calls a shell
    # function defined elsewhere in the script.
    while getopts q:d:cbrlvxsuh name
    do
        case $name in
            q) query_filter="$OPTARG";;
            d) directory_filter="$OPTARG";;
            c) clean;;
            b) run_queries;;
            r) rollup;;
            l) labels;;
            v) make_tsv;;
            x) classify;;
            s) sync_with_aws;;
            # Unknown flag, -u, or -h: print usage and stop.
            ?|u|h) printf "Usage: %s -qdcbrlvxsuh: ####\n\
    [ -q'*.query.txt' ] => query file pattern to build\n\
    [ -d../../workspace/medtop ] => directory to process\n\
    [ -c ] => clean\n\
    [ -b ] => build\n\
    [ -r ] => rollup\n\
    [ -l ] => create labels\n\
    [ -v ] => create TSV files\n\
    [ -x ] => classify\n\
    [ -u ] => Usage\n\
    [ -h ] => Usage\n\
    [ -s ] => sync with AWS S3 bucket\n" "$0"
               exit 2;;
        esac
    done

b. The model is trained on vectors built from the binary representation of each word.

Python program – fit the new model ($ python train.py)

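Current neural network model design from train.py (create):

    import tensorflow as tf

    def create_model():
        # Input: up to 20 terms per article, each a vector of 200 values.
        return tf.keras.models.Sequential([
            # Dense acts on the last axis, so each term vector maps to 30 units.
            tf.keras.layers.Dense(30, input_shape=(20, 200)),
            # (20, 30) is flattened to 600 values.
            tf.keras.layers.Flatten(),
            tf.keras.layers.Dense(1024, activation='relu'),
            tf.keras.layers.Dense(20, activation='sigmoid')
        ])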

Neural network model design from train.py (optimize):

    opt = tf.keras.optimizers.SGD(learning_rate=0.1)
    model = create_model()
    model.compile(optimizer=opt,
                  loss=tf.keras.losses.MeanSquaredError(),
                  metrics=['accuracy'])

c. The word embeddings are then combined into a data frame that holds one embedding for each extracted term.

d. Up to 20 terms are extracted, and each term has its own embedding vector of 200 floating-point numbers.

e. The initial implementation used a simple binary conversion to create each vector of length 200.
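The steps above do not spell out the binary conversion, so the following is only a sketch of one plausible reading: each term's UTF-8 bytes are unpacked into bits, then truncated or zero-padded to 200 values, and the article is padded to 20 rows. The function names `term_to_bits` and `fingerprint` are hypothetical, and stemming is assumed to have happened already.

```python
from typing import List

MAX_TERMS = 20     # terms kept per article (from the text)
VECTOR_LEN = 200   # values per term vector (from the text)


def term_to_bits(term: str, length: int = VECTOR_LEN) -> List[float]:
    """Assumed encoding: the term's UTF-8 bytes, unpacked bit by bit."""
    bits: List[float] = []
    for byte in term.encode("utf-8"):
        # Most significant bit first, eight bits per byte.
        bits.extend(float((byte >> shift) & 1) for shift in range(7, -1, -1))
    bits = bits[:length]                         # truncate long terms
    return bits + [0.0] * (length - len(bits))   # zero-pad short terms


def fingerprint(terms: List[str]) -> List[List[float]]:
    """Sort the (already stemmed) terms and build a 20 x 200 matrix."""
    rows = [term_to_bits(t) for t in sorted(terms)[:MAX_TERMS]]
    # Pad with all-zero rows so every article has exactly MAX_TERMS rows.
    rows += [[0.0] * VECTOR_LEN for _ in range(MAX_TERMS - len(rows))]
    return rows


fp = fingerprint(["market", "bank", "rate"])
print(len(fp), len(fp[0]))  # 20 200
print(fp[0][:8])            # bits of 'b' (0x62), the first byte of "bank"
```

The resulting shape matches the `input_shape=(20, 200)` expected by the first layer of the model. With this encoding, 200 bits cover only the first 25 bytes of a term.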

4. Fit the model

The following fields are obtained in order to fit the model with the results from the queries and other enhanced data:

- fingerprint: an array of word (term) embeddings, that is, [embedding for term1, embedding for term2, …, embedding for termN], padded to length 20

- article_print: the result of classification used for searching (a compressed format for indexing in Solr)

- article_confidence: the TensorFlow prediction of how well the system identified the IPTC category
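As a rough illustration of how a confidence value might be read from a prediction, the sketch below assumes that each of the model's 20 sigmoid outputs corresponds to one candidate category, which the text does not confirm. The `top_category` helper, the label names, and the scores are all made up for the example.

```python
from typing import List, Tuple


def top_category(scores: List[float], labels: List[str]) -> Tuple[str, float]:
    """Return the label with the highest score, together with that score."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    best = max(range(len(scores)), key=scores.__getitem__)
    return labels[best], scores[best]


# Hypothetical 20-element prediction and placeholder labels.
labels = [f"category_{i}" for i in range(20)]
scores = [0.05] * 20
scores[7] = 0.91

article_label, article_confidence = top_category(scores, labels)
print(article_label, article_confidence)  # category_7 0.91
```

Because sigmoid outputs are independent and do not sum to 1, the highest score is a per-category confidence rather than a probability over all categories.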
